Splunk Indexed Data Backup and Archive

LVM snapshots and AWS EBS snapshots are complementary technologies that ensure reliable backups of Splunk configurations and indexed data, including hot buckets. This post describes how these technologies were used in a recent project to implement a data protection strategy.

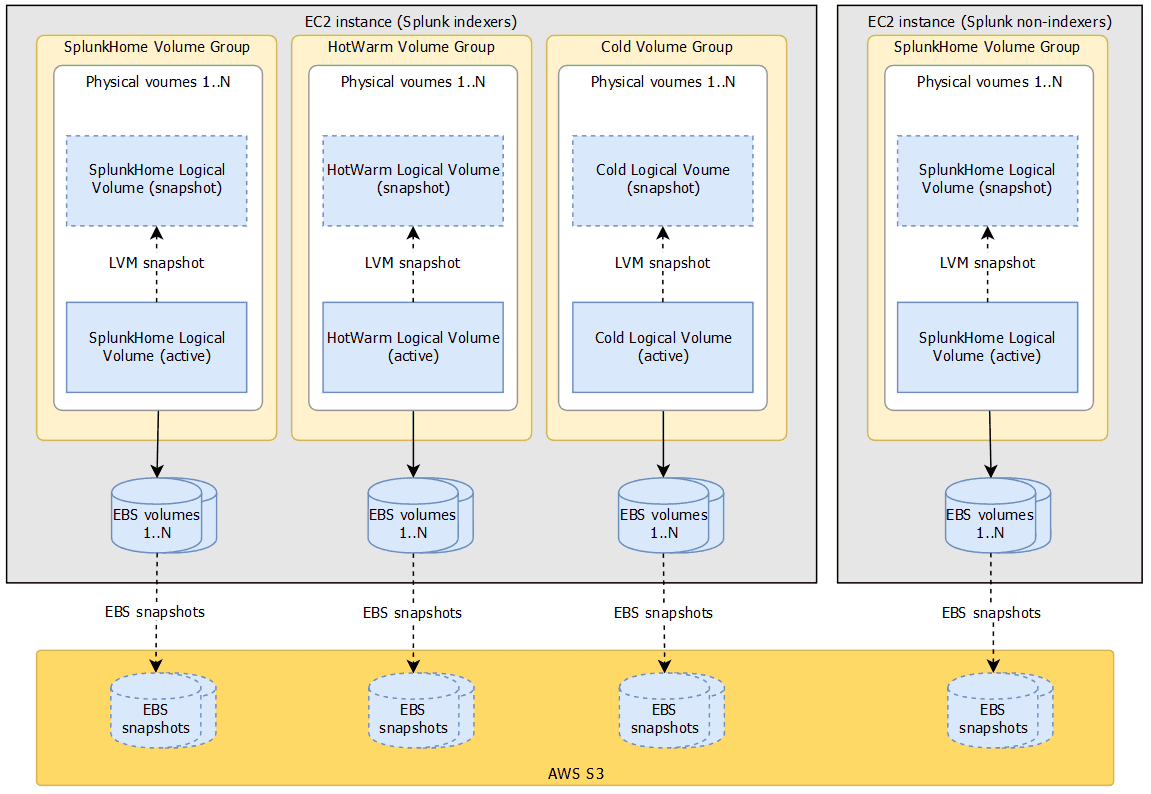

Logical Volume Manager (LVM) snapshots and Elastic Block Store (EBS) snapshots are complementary technologies used to ensure volume-consistent backups of the Splunk home and index volumes (the latter holding hot/warm and cold buckets). An LVM snapshot automatically flushes all cached writes and pauses new writes while the snapshot is being initialised. Typically this pause lasts only a few seconds.

An LVM snapshot uses copy-on-write (COW) to preserve the state of the source volume at the point of the snapshot: before any new write to the volume is committed, LVM first copies the original block to the snapshot volume. This is why an LVM snapshot is fast to create and initially takes almost no storage space. However, the longer the snapshot is active, the more blocks change on the source volume and the more disk space the snapshot volume requires.
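The copy-on-write behaviour can be illustrated with a toy model. This is only a sketch of the idea, not how LVM is implemented: the class `CowSnapshot` and its method names are hypothetical, and disk blocks are modelled as items in a Python list.

```python
class CowSnapshot:
    """Toy copy-on-write snapshot over a list of blocks (illustrative only)."""

    def __init__(self, origin):
        self.origin = origin   # live volume, modified in place
        self.saved = {}        # block index -> content at snapshot time

    def write(self, index, data):
        # Preserve the original block the first time it is overwritten.
        if index not in self.saved:
            self.saved[index] = self.origin[index]
        self.origin[index] = data

    def read_snapshot(self, index):
        # Unchanged blocks come from the origin, changed ones from the saved copies.
        return self.saved.get(index, self.origin[index])

    def space_used(self):
        # Number of blocks the snapshot has had to store so far.
        return len(self.saved)


volume = ["a", "b", "c", "d"]
snap = CowSnapshot(volume)
print(snap.space_used())     # nothing copied at creation time
snap.write(1, "B")
snap.write(1, "BB")          # a second write to the same block copies nothing new
snap.write(3, "D")
print([snap.read_snapshot(i) for i in range(4)])
print(volume, snap.space_used())
```

The snapshot view still returns the original four blocks while the live volume has moved on, and the space consumed grows only with the number of distinct blocks changed since the snapshot was taken.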

LVM snapshots by themselves are not suitable as a backup strategy. Instead, they are used to generate a volume-consistent image which can then be backed up using EBS snapshots. Immediately after the LVM snapshots have been created, EBS snapshots are taken of all the underlying EBS volumes that comprise the LVM volume group.

LVM snapshots also introduce a performance overhead in the form of IO latency, because a write must first copy the original block to the snapshot volume and only then write the new data. For these reasons, snapshots of all EBS volumes participating in the LVM volume group must be taken at roughly the same time in order to minimise these effects.

Whilst an LVM volume group including the snapshot may span several EBS volumes, as long as snapshots of all EBS volumes have been taken, the LVM snapshot is guaranteed to be consistent once all the participating EBS snapshots have been restored.

As soon as the EBS snapshots have completed, the LVM snapshots are removed. EBS snapshots do not freeze the filesystem, but since the LVM snapshot volumes are not mounted there is no IO activity on them and hence no risk to their data integrity. The same cannot be said of the active volumes in use while the EBS snapshots are taken; however, that is acceptable since only the integrity of the snapshot volumes matters.
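The sequence described above (create LVM snapshots, snapshot every underlying EBS volume, remove the LVM snapshots) can be sketched as an ordered list of commands. This is a minimal illustration rather than the project's actual implementation: the function `snapshot_plan`, the volume group and logical volume names, the EBS volume IDs and the 10G snapshot size are all assumptions made for the example, and the commands are only printed, not executed.

```python
import shlex


def snapshot_plan(vg, lvs, ebs_volume_ids, snap_size="10G"):
    """Return the ordered commands for one backup cycle (hypothetical helper)."""
    plan = []
    # 1. Create a point-in-time LVM snapshot of each logical volume.
    for lv in lvs:
        plan.append(["lvcreate", "--snapshot", "--size", snap_size,
                     "--name", lv + "_snap", "/dev/{}/{}".format(vg, lv)])
    # 2. Snapshot every EBS volume backing the volume group, back to back.
    for vol in ebs_volume_ids:
        plan.append(["aws", "ec2", "create-snapshot", "--volume-id", vol,
                     "--description", "splunk {} backup".format(vg)])
    # 3. Remove the LVM snapshots to end the copy-on-write overhead.
    for lv in lvs:
        plan.append(["lvremove", "--yes", "/dev/{}/{}_snap".format(vg, lv)])
    return plan


# Example values are placeholders, not taken from the project.
for cmd in snapshot_plan("vg_splunk", ["lv_home", "lv_index"],
                         ["vol-0aaa", "vol-0bbb"]):
    print(" ".join(shlex.quote(arg) for arg in cmd))
```

A real run would also capture the snapshot IDs returned in step 2 and wait for them (for example with `aws ec2 wait snapshot-completed`) before step 3, since the LVM snapshots are only removed once the EBS snapshots have completed.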

Further, since EBS snapshots are incremental, they minimise space usage and costs.

Finally, EBS snapshots are stored in S3, which offers durable and cost-efficient storage for backups and archives.

The high-level relationships between LVM (volume groups, physical volumes, logical volumes, volume snapshots) and EBS (volumes and snapshots) are shown below.

Refer to this post for implementation details

Archiving

As the data in Splunk indexes ages according to the configured retirement policy, it eventually reaches the final frozen stage, where Splunk by default deletes frozen buckets. Since the solution is required to archive data instead, the project uses AWS S3 for long-term archiving of frozen buckets.

Buckets are frozen when either of the following conditions, configured per index, is met:

- age of the data exceeds the configured number of seconds

- size of the index exceeds its configured size

When Splunk freezes old buckets, a script is called to upload each bucket to AWS S3. The script is executed by the Splunk indexer instances when either of the above conditions is true.
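The two conditions can be pictured with a simplified model. The sketch below is not Splunk's actual logic; it assumes each bucket is described by a hypothetical `(bucket_id, latest_event_epoch, size_mb)` tuple, freezes buckets that are too old first, and then freezes the oldest remaining buckets until the index fits within its size limit.

```python
def buckets_to_freeze(buckets, now, frozen_time_period_secs, max_total_size_mb):
    """Return the IDs of buckets that would be frozen (simplified model).

    buckets: list of (bucket_id, latest_event_epoch, size_mb) tuples.
    """
    # Condition 1: the newest event in the bucket is older than the retention period.
    frozen = [b for b in buckets if now - b[1] > frozen_time_period_secs]

    # Condition 2: the index is over its size limit, so freeze oldest buckets first.
    remaining = sorted((b for b in buckets if b not in frozen), key=lambda b: b[1])
    total_mb = sum(b[2] for b in remaining)
    while remaining and total_mb > max_total_size_mb:
        oldest = remaining.pop(0)
        total_mb -= oldest[2]
        frozen.append(oldest)

    return [b[0] for b in frozen]


# Made-up buckets: four buckets of 400 MB each with increasing event times.
example = [("db_a", 100000, 400), ("db_b", 800000, 400),
           ("db_c", 900000, 400), ("db_d", 990000, 400)]
print(buckets_to_freeze(example, now=1000000,
                        frozen_time_period_secs=500000,
                        max_total_size_mb=1000))
```

In this example `db_a` is frozen because of its age, and `db_b` is frozen because the remaining 1200 MB still exceeds the 1000 MB limit.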

    # indexes.conf
    [<index_name>]
    frozenTimePeriodInSecs = <time_in_seconds>
    maxTotalDataSizeMB = <size_in_mb>
    coldToFrozenScript = /opt/splunk/etc/slave-apps/cold-to-frozen-s3/bin/coldToFrozenS3.py

The script is available as a Splunk add-on developed by Atlassian.

We have enhanced the script to read its settings from a configuration file and to use a distinct upload path per indexer.

Splunk passes the bucket directory path as an argument when invoking the script, which in turn spawns an AWS CLI subprocess to handle the upload.

The script uploads data to the S3 location s3://<bucket-name>/<ec2-instance-id>/<index_name>/<bucket_id>.

    #!/usr/bin/env python3
    import configparser
    import os
    import subprocess
    import sys
    from urllib.error import URLError
    from urllib.request import Request, urlopen

    # Global variables
    AWSCLI = "/usr/bin/aws"


    class ColdToFrozenS3Error(Exception):
        pass


    def load_conf():
        """ load and parse conf """
        # TODO: error handling for file open exceptions
        config = configparser.ConfigParser()
        config.read(os.path.join(os.path.abspath(
            os.path.dirname(__file__)),
            '..',
            'local',
            'cold_to_frozen_s3.conf'))

        _conf = {}
        # TODO: error handling for null or empty string
        _conf['region'] = config['atlassian_cold_to_frozen_s3']['region']
        _conf['bucket_name'] = config['atlassian_cold_to_frozen_s3']['bucket_name']

        # no_proxy to link-local addresses for EC2 metadata
        try:
            no_proxy = os.environ['no_proxy'].split(',')
            no_proxy.append('169.254.169.254')
            os.environ['no_proxy'] = ','.join(no_proxy)
        except KeyError:
            pass

        ec2metadata = Request('http://169.254.169.254/latest/meta-data/instance-id')
        try:
            response = urlopen(ec2metadata)
        except URLError as e:
            # HTTPError is a subclass of URLError and carries a status code
            if hasattr(e, 'code'):
                print('The server returned error: ', e.code)
            else:
                print('Error contacting server: ', e.reason)
            # Without an instance ID the upload path cannot be built
            raise ColdToFrozenS3Error("Could not read EC2 instance ID") from e
        else:
            _conf['instanceid'] = response.read().decode()

        return _conf


    def main():
        """ main """
        if len(sys.argv) < 2:
            sys.exit('usage: python coldToFrozenS3.py <bucket_dir_to_archive>')

        colddb = sys.argv[1]
        if colddb.endswith('/'):
            colddb = colddb[:-1]

        if not os.path.isdir(colddb):
            sys.exit("Provided path is not directory: " + colddb)

        rawdir = os.path.join(colddb, 'rawdata')
        if not os.path.isdir(rawdir):
            sys.exit("No rawdata directory, this is probably not an index database: " + colddb)

        # ok, we have a path like this: /SPLUNK/DB/PATH/$INDEX/colddb/db_1452411618_1452411078_1403
        # and we want it to end up like this:
        # s3://BUCKETNAME/instance-id/$INDEX/db_1452411618_1452411078_1403
        s3conf = load_conf()
        s3bucket = s3conf['bucket_name']
        region = s3conf['region']
        instanceid = s3conf['instanceid']

        segments = colddb.rsplit('/', 3)
        if len(segments) != 4:
            # segments is a list, so convert it before concatenating
            sys.exit("Path broke into incorrect segments: " + str(segments))
        # should be like: ['/SPLUNK/DB/PATH', '$INDEX', 'colddb', 'db_1452411618_1452411078_1403']

        remotepath = 's3://{s3bucket}/{instanceid}/{index}/{splunkbucket}'.format(
            s3bucket=s3bucket,
            instanceid=instanceid,
            index=segments[1],
            splunkbucket=segments[3])

        # Build the argument list directly so paths containing spaces survive
        command = [AWSCLI]
        if region:
            command += ['--region', region]
        command += ['s3', 'sync', colddb, remotepath]

        # Clear library paths inherited from Splunk's environment before
        # running the system AWS CLI; remove each one independently
        os.environ.pop('LD_LIBRARY_PATH', None)
        os.environ.pop('PYTHONPATH', None)

        try:
            # Benchmarked our indexers being able to upload 10GB to S3 in us-west-2 usually ~5mins
            # We kill uploads taking longer than 15 minutes to free up Splunk worker slots
            subprocess.check_call(
                command,
                stdout=sys.stdout,
                stderr=sys.stderr,
                timeout=900)
        except subprocess.TimeoutExpired:
            raise ColdToFrozenS3Error("S3 upload timed out and was killed")
        except Exception as e:
            raise ColdToFrozenS3Error("Failed executing AWS CLI") from e

        print('Froze {0} OK'.format(sys.argv[1]))


    if __name__ == '__main__':
        main()

Configure the S3 details in the local conf file on the index cluster master:

    # /opt/splunk/etc/master-apps/cold-to-frozen-s3/local/cold_to_frozen_s3.conf
    [atlassian_cold_to_frozen_s3]
    region = ap-southeast-2
    bucket_name = uuid-splunk-frozen-bucket

Ensure that the Splunk index cluster nodes are launched with an instance profile that allows writing to the S3 bucket.
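As an illustration of what such an instance profile might permit, the sketch below builds a minimal IAM policy document for the role. It is an assumption about a reasonable least-privilege policy, not the policy used in the project: `aws s3 sync` lists the destination bucket and then puts objects, so the example grants `s3:ListBucket` on the bucket and `s3:PutObject` on its objects. The helper name `frozen_bucket_write_policy` is hypothetical.

```python
import json


def frozen_bucket_write_policy(bucket_name):
    """Build an IAM policy document allowing uploads to one bucket (illustrative)."""
    bucket_arn = "arn:aws:s3:::" + bucket_name
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # sync lists the destination to decide what needs uploading
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": [bucket_arn],
            },
            {
                # uploading the frozen bucket files themselves
                "Effect": "Allow",
                "Action": ["s3:PutObject"],
                "Resource": [bucket_arn + "/*"],
            },
        ],
    }


policy = frozen_bucket_write_policy("uuid-splunk-frozen-bucket")
print(json.dumps(policy, indent=2))
```

The printed JSON can be attached to the IAM role behind the instance profile. Delete permissions are deliberately left out, since the indexers only ever need to add data to the archive.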

Finally, complete the archival strategy by using S3 lifecycle policies to:

- migrate data to cheaper storage after a period of time, then

- transition it to Glacier after a further period of time, then

- delete it after 7 years
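A lifecycle configuration along these lines could look like the sketch below, which builds the JSON document that S3 accepts for a bucket lifecycle configuration. Only the 7-year deletion comes from the requirements above; the 30-day and 90-day transition periods, the choice of `STANDARD_IA` as the cheaper storage class, the rule ID and the helper name `lifecycle_rules` are assumptions for the example.

```python
import json


def lifecycle_rules(ia_after_days=30, glacier_after_days=90,
                    expire_after_days=7 * 365):
    """Build an S3 lifecycle configuration document (illustrative values)."""
    return {
        "Rules": [{
            "ID": "splunk-frozen-archive",
            "Status": "Enabled",
            "Filter": {"Prefix": ""},   # empty prefix applies the rule to every object
            "Transitions": [
                {"Days": ia_after_days, "StorageClass": "STANDARD_IA"},
                {"Days": glacier_after_days, "StorageClass": "GLACIER"},
            ],
            # 7 x 365 days; leap days are ignored in this approximation
            "Expiration": {"Days": expire_after_days},
        }]
    }


config = lifecycle_rules()
print(json.dumps(config, indent=2))
```

The resulting document can be saved to a file and applied with `aws s3api put-bucket-lifecycle-configuration`, or managed through whichever infrastructure tooling the project already uses.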

Given the growing volumes of data that need to be archived, using S3 lifecycle policies is an effective cost management strategy.